When Wellness Meets Machine

Whether in healthcare or haute couture, the consumer seeks hassle-free experiences. But as Dr. Anushka Patchava explains, the future belongs to those who design for emotional resonance, not just efficiency.

In a world increasingly powered by algorithms, artificial intelligence is no longer confined to back-end automation. It is curating our identities, shaping our preferences and even influencing how we connect with others. From luxury fashion to digital health, the central question is no longer what AI can do, but what it should do.

The Future Of Human Connection

As AI increasingly embeds itself into the fabric of daily life, from content feeds to wellness routines, human connection is evolving, and perhaps even being challenged. The ability to anticipate needs and hyper-personalise experiences is seductive, but it raises the question: what is lost in this drive for precision?

Intuition is being mimicked. Aesthetic tastes are being predicted. And yet, the more intelligent our machines become, the more intentional we must be about preserving the uniquely human. We must protect presence as the new luxury. Individual expression must not be flattened into homogeneous preference patterns. And we must resist reducing experiences to efficiencies.

The most profound opportunities for the future – across retail, wellness or even relationships – will emerge from spaces, digital or otherwise, that feel deeply human. Empathy, vulnerability, imperfection: these are not inefficiencies. They are the hallmarks of meaning.

This trend is not confined to lifestyle. In mental health, AI-powered tools like Wysa offer 24/7 therapeutic dialogue in over 10 languages – not to diagnose, but to hold space for early disclosures. Empathetic design is baked into the logic: when crisis is detected, the system seamlessly hands over to a real therapist.

Lessons From Healthcare: Augmented, Not Artificial

AI’s impact on emotional connection in healthcare and wellness reveals the paradox at play: it can deepen or diminish that connection, depending on how it is used.

In clinical settings, AI is increasingly being designed to support – not replace – the human connection. Take ambient AI scribe tools like Nuance DAX or Abridge, now widely used across major hospitals in the US and UAE. These systems listen in on doctor-patient conversations and auto-generate clinical notes in real time, dramatically cutting admin and giving clinicians more time to be emotionally present. Emirates Health Services’ rollout across 17 hospitals was driven by a single aim: to “give doctors their patients back.”

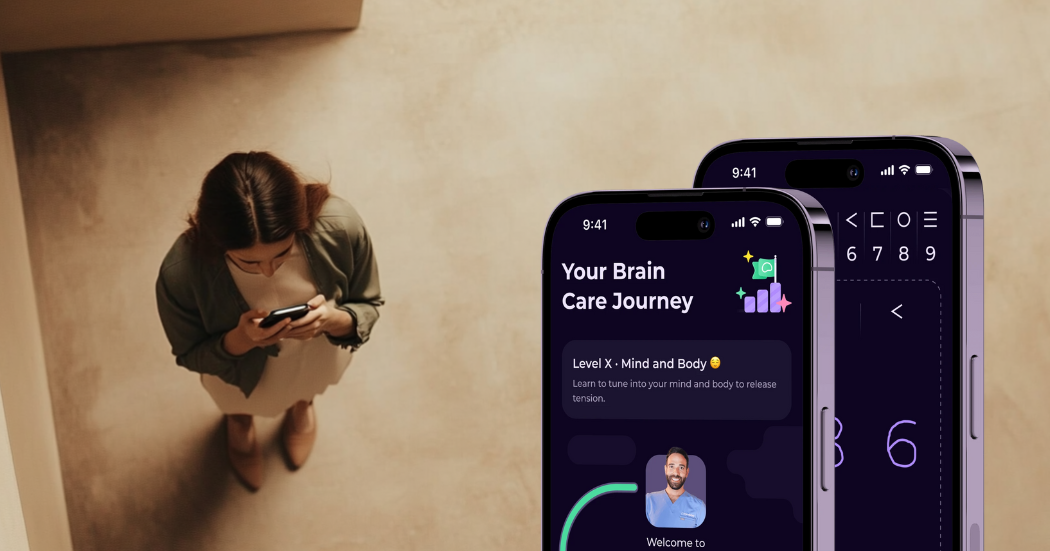

Meanwhile, mental health platforms like Mindstep are breaking stigma and enabling early, emotionally safe disclosures from users who might otherwise stay silent. And new AI “companions” such as Ebb by Headspace offer non-judgmental, emotionally intelligent dialogue between therapy sessions, trained in motivational interviewing and designed to make users feel supported, seen and understood.

Even large-scale chronic disease management is now handled by Lark Health’s AI nurse, which exchanged over 400 million messages with patients in a single year – a volume equivalent to that of 15,000 human nurses.

The key insight? AI must never replace care; it must support it.

Luxury brands can take note. Just as AI scribe tools at the Cleveland Clinic reduce admin time and allow clinicians to be more present – boosting empathy scores – AI in luxury can serve as the silent enabler. It can take care of the noise so that stylists, advisors and experience curators can focus on presence, not process.

When AI amplifies human interaction, connection deepens. But when AI over-curates or manipulates, the customer may feel boxed in, predicted rather than understood. Seen, but not heard.

Tech’s Responsibility: The Emerging Duty Of Care In Luxury

In medtech, the ethical framework is clear: informed consent, transparency, safety and oversight. These principles aren’t just safeguards – they’re deeply human. And as AI in luxury becomes increasingly intimate – curating identity, shaping desire, guiding emotion – shouldn’t the same duty of care apply?

Luxury may not save lives, but it shapes them. Just as biometric wearables like the PieX AI pendant discreetly track mood, stress and emotional states using on-device AI, brands are now intervening in how we see ourselves. Yet, without the frameworks of medtech, there is no requirement to disclose, explain or protect.

Apple’s 2025 update invites users to log their daily moods manually, encouraging emotional reflection without surveillance. Meanwhile, Oura’s new Readiness and Stress scores give wearers a high-level sense of balance and burnout, turning raw data into meaningful emotional insight.

Dior’s AI SkinScanner, LVMH’s personalised AI styling, or sentiment analysis across e-commerce platforms all touch the edges of identity and emotional wellbeing. These systems can reinforce aspirational ideals – but also vulnerability and insecurity. If we acknowledge that AI can shape the mind, then ethical responsibility cannot be ignored.

The modern consumer deserves clarity:

How is this algorithm influencing me?

Why am I being shown this product, story or identity?

Is my emotional data being used to serve me – or sell to me?

Emotional Intelligence As Currency

Can AI create authentic emotional anchors? To a degree, yes. AI-generated music, conversational bots and mood-based recommendations can trigger real neurological responses. But emotional anchoring – the type that builds loyalty, memory and meaning – still relies on authenticity, context and human mirroring.

Emotional resonance isn’t just about stimulation – it’s about recognition. That moment of ‘I see you. I feel you.’ In luxury, that might be a fragrance that evokes memory or a perfectly curated in-store moment. AI can support this, but it should not simulate it without disclosure.

Surprise and ambiguity have always been part of the luxury journey. But trust is now part of that journey too. And in a world where AI curates aspiration, brands must disclose how these systems work – not to dilute the magic, but to deepen the integrity.

Designing The Future: What Luxury Can Learn

Luxury brands are entering a new era – not of more, but of meaningful. And AI must evolve from being a tool of precision to an enabler of presence.

Here’s what that looks like:

1. Tech should elevate, not obscure, emotion

AI should feel like a mirror, not a mask. Generative design and predictive feeds must guide, rather than trap, the consumer. Discovery, surprise and agency should remain.

2. Time is the new trust

AI can create efficiency – but the real value lies in what is done with that time. Whether it’s better storytelling, slower shopping rituals or deeper advisory moments – emotional richness must be reinvested.

3. The future is sensory

AI in healthcare is being used to enhance sensory rehab and emotional response. Luxury must take note – curating not just journeys of convenience, but sensory, emotional landscapes that move people.

4. Transparency builds loyalty

A majority – 78 per cent – of consumers want brands to explain how AI is used. Hiding the algorithm may feel sleek, but it risks long-term erosion of trust. The future is not invisible; rather, it is beautifully visible.

5. Regulation may not exist – but responsibility does

Just because a sector is not regulated doesn’t mean it is exempt from ethics. In a world where AI nudges emotion, identity and desire, brands must ask not just what they can do, but what they ought to do.

In both health and high fashion, the most powerful experiences are those that leave a lasting emotional residue. And in an age saturated with AI, the deepest form of luxury will not be what is most personalised, but what is most human.

In the end, the greatest privilege may not be convenience, but the time, space and attention to feel something real.

Dr. Anushka Patchava is a C-suite healthcare leader and entrepreneur, formerly Deputy Chief Medical Officer at Vitality. She co-founded Wellx, an award-winning insurtech disrupting the Middle East’s health insurance market, and currently serves as Chief Growth Officer and Chief Clinical Safety Officer at UK-based mental health start-up Mindstep. Both companies were selected for Google’s inaugural AI for Health accelerator in 2023. With a career spanning global strategy and innovation roles across the health insurance and pharmaceutical sectors, Anushka advises the UN and World Economic Forum on AI and healthcare policy, and supports private equity firms on healthcare M&A and tech investments. Recognised as one of the Top 5 Women Business Leaders in 2024 and a Top 10 Global Chief Medical Officer, she holds degrees from Imperial College, Cambridge, Harvard, and an Executive MBA from London Business School.

Image sources: Instagram, Instagram, Dove, Midjourney, Mindstep
